AI model debates usually happen on Twitter, in benchmarks, or inside product reviews. But a different signal is emerging elsewhere: prediction markets. On platforms like Polymarket, traders are putting real money behind their expectations of which AI model will come out on top. This article is not about reviews or leaderboards. It is about how capital is positioning itself around ChatGPT, Claude, and Gemini, and what that positioning reveals that perception alone does not.

As AI systems became central to productivity, search, and enterprise software, the question of the “best AI model” stopped being academic. It turned into a forward-looking bet on adoption, distribution, and long-term dominance. Benchmarks measure past performance, but markets trade expectations about the future.

Prediction markets provide a different lens. Instead of asking which model looks strongest today, they ask which outcome will matter tomorrow — rankings, adoption signals, or benchmark wins defined in advance. In this context, AI predictions shift from opinion to probability, where expectations are weighted by capital rather than confidence.
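To make the shift from opinion to probability concrete, here is a minimal sketch of how share prices can be read as implied probabilities. It assumes each share pays out 1 USDC if its outcome occurs and that the listed outcomes are mutually exclusive. The prices are hypothetical, not real market data, and `implied_probabilities` is an illustrative helper, not part of any platform API.

```python
def implied_probabilities(prices):
    """Normalize per-share prices (0..1) so the implied probabilities sum to 1."""
    total = sum(prices.values())
    if total <= 0:
        raise ValueError("prices must sum to a positive value")
    return {outcome: price / total for outcome, price in prices.items()}


# Hypothetical prices for illustration only; they sum to 1.04, not 1.00.
example_prices = {"ChatGPT": 0.30, "Claude": 0.15, "Gemini": 0.45, "Other": 0.14}

probs = implied_probabilities(example_prices)
for outcome, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{outcome}: {p:.1%}")
```

Raw prices across outcomes rarely sum to exactly 1 because of spreads and thin liquidity, so dividing by the total is a common simplification rather than an exact reading.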

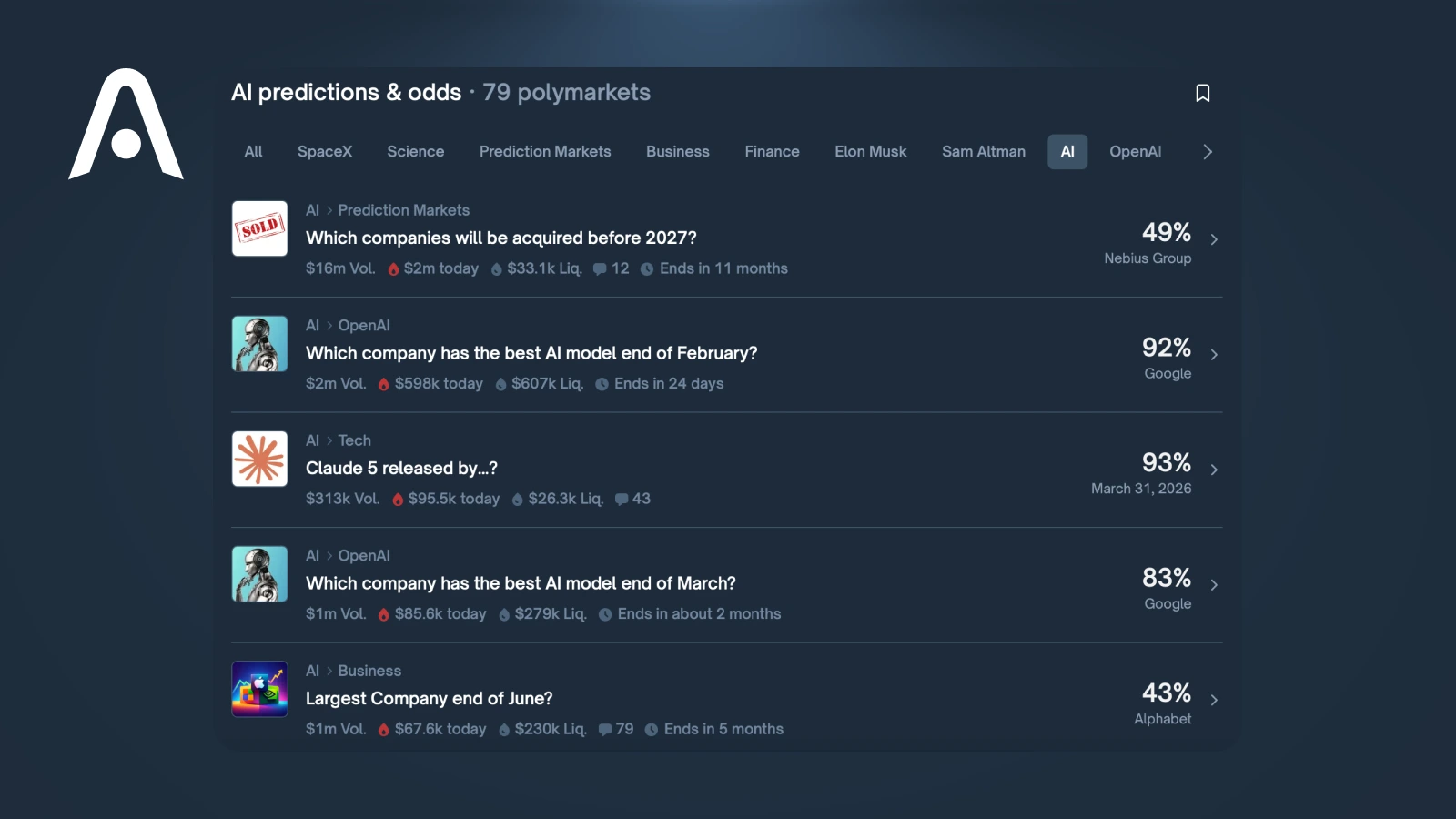

Right now, AI-related markets on Polymarket focus on comparative outcomes rather than abstract quality. Traders are not betting on which model feels smartest, but on which one will be recognized as “best” under predefined criteria.

This makes the markets less about technical elegance and more about expectations around distribution, momentum, and institutional adoption.

AI prediction markets are resolved by rules, not opinions. Winning does not depend on which model users personally prefer or which one feels most capable in practice.

Because of this, understanding how a market resolves is often more important than understanding AI performance itself.

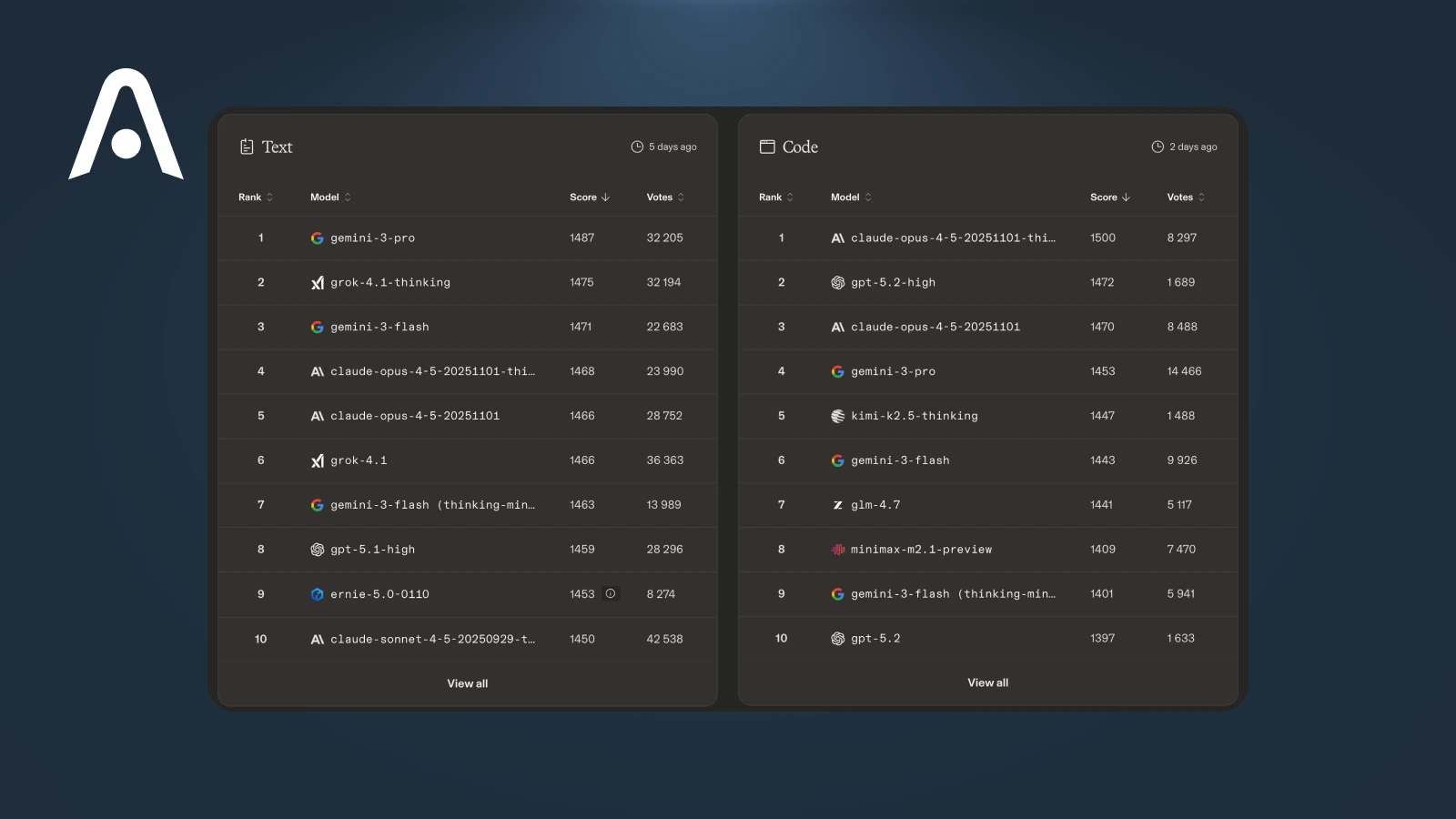

Most AI markets on Polymarket resolve using external benchmarks or published rankings, not subjective consensus. Common resolution sources include LMArena, SWE-bench, and clearly specified public rankings or announcements.

These sources are lagging indicators by design. They capture results after evaluations conclude, not real-time progress. That’s why market wording matters: a small change in which benchmark or cutoff date is referenced can decide the outcome, regardless of how models feel in day-to-day use.
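The effect of wording can be sketched with a toy resolver. Everything here is hypothetical: the model names, the benchmark labels `arena` and `coding`, the scores, and the `resolve` function itself, which assumes a market resolves to the top-scoring model on one named benchmark using only results published on or before the cutoff date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Score:
    model: str
    benchmark: str
    published: date
    value: float


def resolve(scores, benchmark, cutoff):
    """Return the top model on `benchmark`, counting only results up to `cutoff`."""
    latest = {}
    for s in sorted(scores, key=lambda s: s.published):
        if s.benchmark == benchmark and s.published <= cutoff:
            latest[s.model] = s.value  # a later result overwrites an earlier one
    if not latest:
        return None  # nothing published in time, so nothing to resolve on
    return max(latest, key=latest.get)


# Hypothetical results for illustration only.
scores = [
    Score("Model A", "arena", date(2026, 1, 10), 1400.0),
    Score("Model B", "arena", date(2026, 1, 12), 1390.0),
    Score("Model B", "arena", date(2026, 2, 3), 1410.0),
    Score("Model A", "coding", date(2026, 1, 20), 0.70),
    Score("Model B", "coding", date(2026, 1, 25), 0.65),
]

print(resolve(scores, "arena", date(2026, 1, 31)))   # January cutoff
print(resolve(scores, "arena", date(2026, 2, 28)))   # February cutoff
print(resolve(scores, "coding", date(2026, 2, 28)))  # different benchmark
```

With the same underlying data, moving the cutoff by one month or naming a different benchmark changes which model the market resolves to.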

ChatGPT remains the narrative leader. It dominates mindshare, sets expectations for “what AI can do,” and benefits from a strong developer ecosystem. That perception often translates into early probability weight in markets.

But smart money can hesitate. A closed ecosystem, controlled release cadence, and uncertainty around long-term distribution can temper conviction. In prediction markets, dominance in conversation doesn’t always equal dominance in outcomes—especially when resolution criteria reward distribution reach or benchmark recognition over qualitative experience.

Claude is widely respected for careful reasoning, long-context handling, and restraint around uncertainty. Among practitioners, it often scores highly on qualitative judgment and safety-aware outputs.

Markets, however, tend to price outcomes rather than craftsmanship. Limited consumer distribution, lower brand visibility, and fewer headline moments can translate into a weaker market signal. In prediction markets, strong reasoning alone doesn’t guarantee probability weight if the resolution favors visibility, rankings, or broad adoption indicators.

Despite mixed sentiment in online AI debates, markets often assign Gemini a surprisingly high probability. This isn’t about elegance. It’s about leverage: the distribution reach behind the model.

Prediction markets tend to price power and reach over polish. When outcomes are tied to rankings, visibility, or institutional adoption, distribution can outweigh perceived model quality—explaining why capital may flow toward Gemini even when benchmarks spark debate.

AI outcomes are judged through multiple lenses, and those lenses often point in different directions. Benchmarks measure technical performance, public discourse shapes perception, and prediction markets reveal where capital is actually being allocated.

AI prediction markets don’t reveal truth in an absolute sense. They reveal expectations under constraints. Prices show how traders collectively weigh benchmarks, brand power, timing, and institutional behavior.

Because capital is at risk, these markets often move before headlines and ahead of consensus narratives. When markets shift, it usually reflects a change in expectations about resolution criteria or distribution dynamics—not a sudden reassessment of model intelligence.

You can’t bet on an AI model in the abstract. On Polymarket, traders don’t wager on “which AI is smartest,” but on specific, verifiable outcomes tied to rankings, benchmarks, or public recognition.

This distinction matters. Markets trade events, not opinions. When someone says they’re “betting on Gemini” or “betting against ChatGPT,” what they’re really doing is taking a position on how an external authority or benchmark will rank models by a certain date. Understanding that difference is critical to interpreting market prices correctly.

Experienced traders don’t treat AI and prediction markets as competing tools. They combine them.

In practice, AI supports thinking, while prediction markets test those ideas against capital-weighted expectations.
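One way to picture that test is to compare a forecast with the market price. The sketch below assumes a binary share that pays 1 USDC if the outcome occurs and ignores fees and slippage. The function name and the numbers are hypothetical and serve only to show the arithmetic.

```python
def expected_value_per_share(price, own_probability, payout=1.0):
    """Expected profit per share if `own_probability` is the true chance of the outcome."""
    if not 0 < price < payout:
        raise ValueError("price must be between 0 and the payout")
    if not 0 <= own_probability <= 1:
        raise ValueError("own_probability must be between 0 and 1")
    return own_probability * payout - price


# Hypothetical numbers: the market prices the outcome at 0.45,
# while a forecast built with AI assistance puts it at 0.55.
market_price = 0.45
forecast = 0.55

edge = expected_value_per_share(market_price, forecast)
print(f"Expected value per share: {edge:+.2f} USDC")
```

A positive number means the forecast disagrees with the market in a direction that would pay off if the forecast were right. It says nothing about whether the forecast is right, which is exactly what the market ends up testing.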

AI forecasting in 2026 is no longer about finding the single “best AI model.” It’s about understanding how expectations form and where capital moves. Language models excel at reasoning, explanation, and scenario building, but prediction markets add a missing layer: accountability through price.

The most reliable signal emerges when both are used together. AI helps interpret complex systems and potential outcomes, while markets translate those ideas into probabilities weighted by risk. In that sense, the future of forecasting isn’t AI versus markets — it’s AI informing decisions that markets ultimately validate or reject.

AI models are powerful tools for reasoning, but prediction markets show where expectations are strong enough for people to risk capital. When forecasts move from text to price, probabilities become clearer — and more accountable.

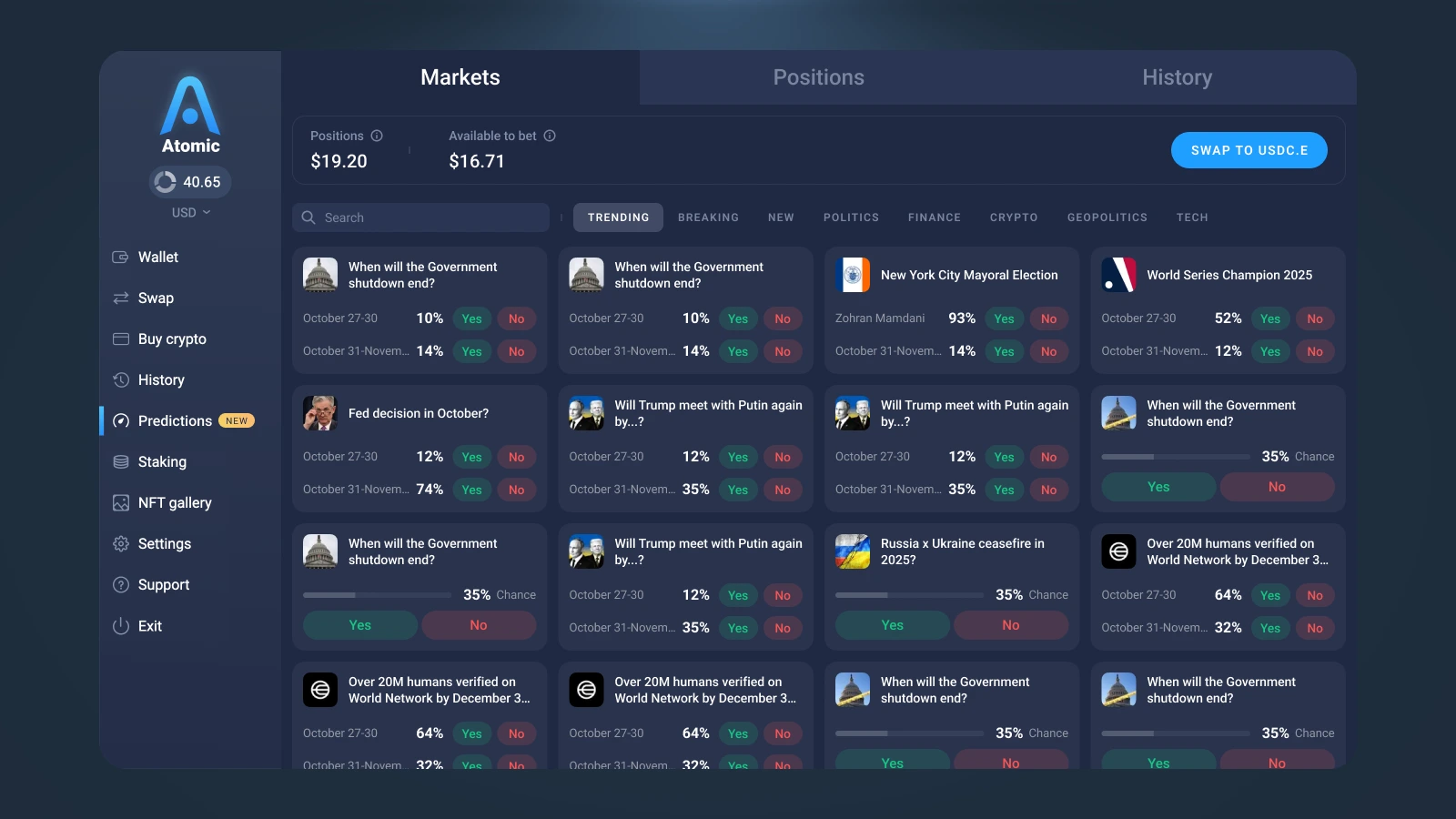

The Atomic Predictions ecosystem is built around this idea: using market-based signals, not just narratives. With USDC and self-custody at the foundation, it gives users a neutral way to explore how capital is positioning itself around events like AI model rankings, without turning opinions into advice.
